Assistant Professor of Computer Science at the Illinois Institute of Technology

Area: Decentralized Machine Learning Systems

Focuses: Federated and Decentralized Learning • Cyber-Physical Systems • AI-for-Science • Complex Networks

Lab: DICE Lab

About

Nathaniel Hudson is an Assistant Professor in the Department of Computer Science at the Illinois Institute of Technology. His research focuses on designing systems that serve AI on edge computing infrastructure (i.e., Edge Intelligence, or EI) for smart city applications.

News

| Oct 17, 2025 | Recipient of 'Torch of Excellence' award at the 2025 Lyman T. Johnson Awards Luncheon. |

|---|---|

| Sep 17, 2025 | 'Best Paper' win at the 2025 e-Science conference! |

| Jun 30, 2025 | Research that explores how active learning methods can improve the rate of novel scientific discovery in generative AI workflows has been accepted for publication at the 2025 IEEE e-Science conference. This paper specifically studies the discovery novel metal-organic frameworks (MOFs) in the MOFA workflows presented in an earlier work. |

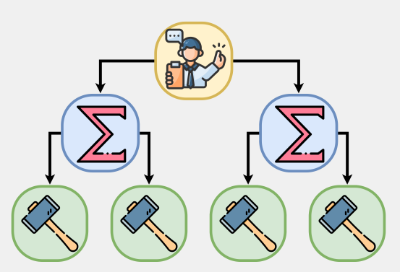

| Jun 26, 2025 | Our paper for Flight, a hierarchical federated learning framework, has been accepted for publication through the Future Generation Computer Systems journal. |

| May 20, 2025 | My former summer undergraduate student mentee, Jordan Pettyjohn, was recently awarded 1st place in the ACM Student Research Competition (SRC) Grand Finals in the graduate competition. This was for his work I worked with him on investigating toxicity ablation in large language models (source, retrieved May 24, 2025). |